Source: https://towardsdatascience.com/new-features-of-scikit-learn-fbbfe7652bfb

New Features of Scikit-Learn

An Overview of the Most Important Features in Version 0.24

Scikit-learn, Python’s machine-learning library, just got better. It’s the best time to update it.

Recently, in December 2020, scikit-learn released a major update in version 0.24. It is the last stable release of version 0. The next release will supposedly be version 1.0 and is currently in development.

I will provide an overview of some important features introduced in version 0.24. Given a large number of highlight features, I highly recommend upgrading your scikit-learn library.

Contents:

- Upgrading to Version 0.24

- Major Features

- Other Interesting Features

Upgrading to Version 0.24

I am using Python environments, so, first I activated my desired environment, and then upgraded the scikit-learn version via pip.

# Activate the environment

>>> source activate py38# Upgrade scikit-learn

>>> pip install — upgrade scikit-learn...

...

Successfully installed scikit-learn-0.24.0

Major Features

1) Sequential Feature Selector (SFS)

It is a way of selecting the most important features from high-dimensional spaces greedily. This transformer uses the model estimator’s cross-validation to iteratively find the best feature-subset by either adding or removing features via the forward and backward selection, respectively.

The forward selection starts with fewer features and gradually adds the best new features till the required number of features is obtained. The backward selection starts with more features and removes them one-by-one till the desired number of features is selected.

It is an alternative to the “SelectFromModel” (SFM) transformer. The advantage of SFS over SFM is that the estimator (model) used in SFS is not required to have the feature_importances_ or coef_ attribute after fitting, unlike in SFM. The disadvantage of SFS is that it is relatively slower than SFM due to its iterative nature and the k-fold CV scoring. The following is one example. Try playing around with the code using a different classifier.

from sklearn.datasets import load_wine

from sklearn.neighbors import KNeighborsClassifier

from sklearn.feature_selection import SequentialFeatureSelectorX, y = load_wine(return_X_y=True, as_frame=True)

n_features = 3model = KNeighborsClassifier(n_neighbors=3)

sfs = SequentialFeatureSelector(model,

n_features_to_select = n_features,

direction='forward') #Try 'backward'

sfs.fit(X, y)print("Top {} features selected by forward sequential selection:{}"\

.format(n_features, list(X.columns[sfs.get_support()])))# Top 3 features selected by forward sequential selection:

# ['alcohol', 'flavanoids', 'color_intensity']

2) Individual Conditional Expectation (ICE) plots

The ICE plots are a new kind of partial dependence plots that show how a prediction for a given sample in the dataset depends on a feature. If you have 100 samples (recordings) and six features (independent variables), then you will get six subplots, where each plot will contain 100 lines (you can specify this number). The y-axes in all subplots will cover the range of the target variable (prediction), while the x-axes will cover the corresponding feature’s range.

The following code generates the ICE plots shown below.

from sklearn.datasets import load_diabetes

from sklearn.ensemble import RandomForestRegressorX, y = load_diabetes(return_X_y=True, as_frame=True)

features = ['s1', 's2', 's3', 's4', 's5', 's6'] # features to plotmodel = RandomForestRegressor(n_estimators=10)

model.fit(X, y)ax = plot_partial_dependence(

model, X, features, kind="both", subsample=60,

line_kw={'color':'mediumseagreen'})ax.figure_.subplots_adjust(hspace=0.4, wspace=0.1) # adjust spacing

3) Successive Halving Estimators

Two new estimators for hyperparameter tuning are introduced namely HalvingGridSearchCV and HalvingRandomSearchCV. They can respectively serve as alternatives for GridSearchCV and RandomizedSearchCV, the in-built hyperparameter tuning methods so far in scikit-learn.

The basic idea is that the search for the best hyperparameter starts on a few samples with a large set of parameters. In the next iteration, the number of samples increase (by a factor n), but the number of parameters is halved/reduced (halved does not only mean a factor of 1/2 (n=2) but can also be 1/3 (n=3), 1/4 (n=4), and so on). This halving procedure continues till the final iteration. Finally, the best subset of parameters is the one that has the highest score in the last iteration.

Similar to the existing two methods, the “successive halving” can either be performed randomly (HavingRandomSearchCV) or exhaustively (HavingGridSearchCV). The following example demonstrates the use case of HavingRandomSearchCV (you can similarly use HavingGridSearchCV). For a comprehensive explanation of how to choose the best parameters, refer here.

from sklearn.datasets import load_iris

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import HalvingRandomSearchCVX, y = load_iris(return_X_y=True, as_frame=True)

model = RandomForestClassifier(random_state=123, n_jobs=-1)param_grid = {"n_estimators": [20, 50, 100],

"bootstrap": [True, False],

"criterion": ["gini", "entropy"],

"max_depth": [1, 2, 3],

"max_features": randint(1, 5),

"min_samples_split": randint(2, 9)

}grid = HalvingRandomSearchCV(estimator=model,

param_distributions=param_grid,

factor=2, random_state=123,)

grid.fit(X, y)

print(grid.best_params_)# Output

{'bootstrap': True, 'criterion': 'gini', 'max_depth': 2, 'max_features': 4, 'min_samples_split': 8, 'n_estimators': 50}

4) Semi-supervised Self Training Classifier

Scikit-learn has several classifiers for supervised learning. Now it is possible for these supervised classifiers to perform a semi-supervised classification, i.e. enabling the model to learn from unlabeled data. Only those classifiers that predict class probabilities for the target variable can be used as semi-supervised classifiers. The official example here provides a comparison of supervised and semi-supervised classification for the Iris dataset.

The following articles nicely explain the concept of semi-supervised learning:

5) Native categorical features in HistGradientBoosting

Both, the ‘HistGradientBoostingRegressor’ and the ‘HistGradientBoostingClassifier’ now can support categorical features that are non-ordered. Generally, one has to encode the categorical features using schemes such as One-Hot Encoding, Ordinal Labeling, etc. With the new native feature, one can directly specify the categorical columns (features) without needing to encode them.

The best part is that the missing values can be treated as a separate category. Typically, the One-Hot Encoding is applied to the training set. For a given feature (column), suppose the training set has six categories, and the test set has seven. In such a situation, the model trained on six additional columns (one extra feature per category value due to the One-Hot Encoding transformation) will throw an error during the validation on the test set due to the unknown 7th category. The native feature can circumvent this problem by considering this 7th unknown category as missing values.

Refer to this official example comparing different encoding schemes.

6) What NOT to do with scikit-learn

New documentation is added that focuses on the common pitfalls while machine learning using scikit-learn. Along with highlighting the common mistakes, it also explains the recommended ways to do certain things. Some examples covered in this documentation includes:

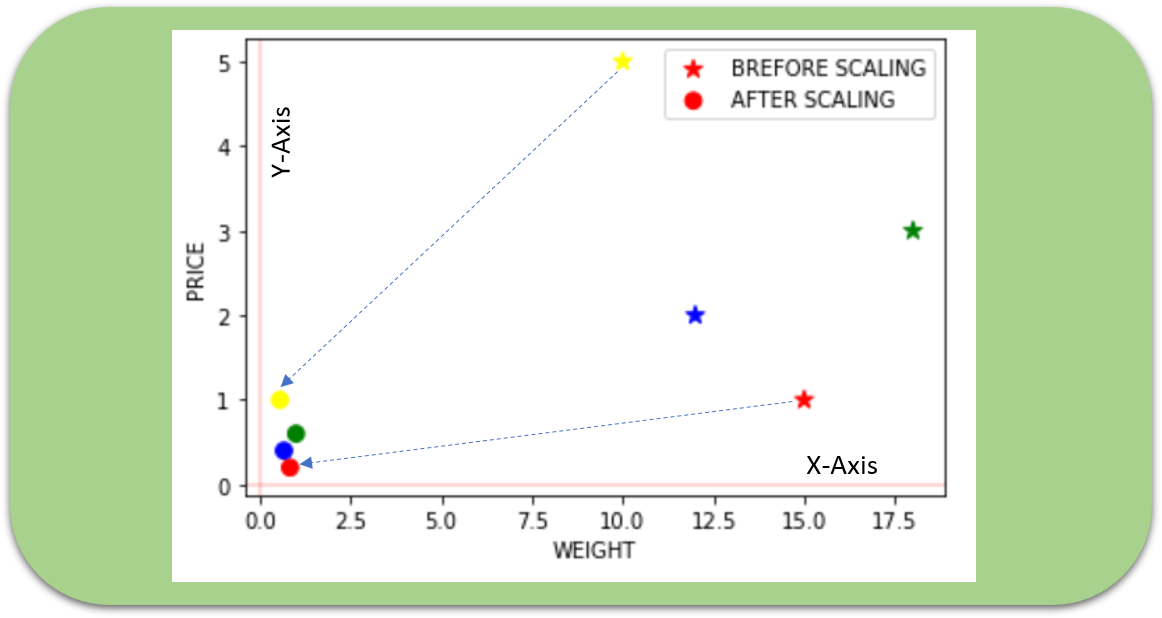

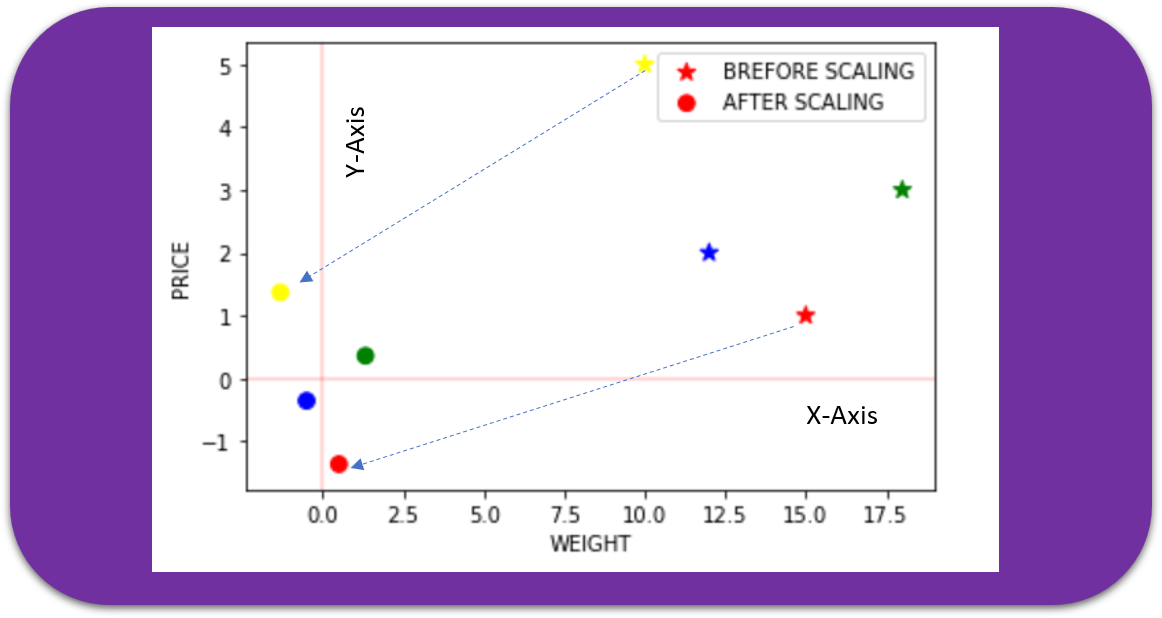

- Best practices for data transformation (e.g., standardization)

- The correct interpretation of the coefficients of linear models

- Avoiding data leakage by correct handling of train and test datasets

- Recommended use of the random state to control the randomness, e.g., during the k-fold CV

Additional Documentation

Typically, GridSearchCV is used to find the best subset of hyperparameters for our model. Based on our predefined performance scoring parameter (e.g., 'accuracy' or 'recall' or 'precision' etc.), we choose the best hyperparameter subset. This documentation provides an example demonstrating the correct way to compare different models in terms of their statistical significance.

Other Interesting Features

1) New evaluation metric for regression

Mean absolute percentage error (MAPE) is a new evaluation metric introduced for regression problems. This metric is insensitive to the global scaling of the target variable. It is a fair measure of the error in situations where the data ranges over several orders of magnitude by computing the relative percentage of error w.r.t true values. Refer to this page for more details.

2) One-Hot Encoder treats missing values as categories

The One-Hot Encoder can now treat the missing values in a categorical feature as an additional category. If the missing values in a particular categorical-feature are 'None' and 'np.nan', they will be encoded as two separate categories.

3) Encoding unknown categories using OrdinalEncoder

The OrdinalEncoder now allows encoding the unknown categories during transformation to a user-specified value.

4) Poisson splitting criterion for DecisionTreeRegressor

DecisionTreeRegressor now supports a new splitting criterion called 'poisson' that splits a node based on a reduction in Poisson’s deviance. It is helpful to model situations in which the target variable represents a count or a frequency. Refer to this article for more on Poisson distribution.

5) Optional color bar in confusion matrix plot

The color bar is now optional while plotting the confusion matrix. To achieve this, you have to pass the keyword colorbar=False.

plot_confusion_matrix(estimator, X, y, colorbar=False)Conclusion

This post lists some of the highlight changes in the latest scikit-learn update (v0.24). The complete list of changes is available here. It was the last update for version 0. The next version will be 1.0. If you are interested in knowing the highlight features in the previous version (v0.23), check out my earlier post: